Toward Facilitating Search in VR With the Assistance of Vision Large Language Models

Abstract

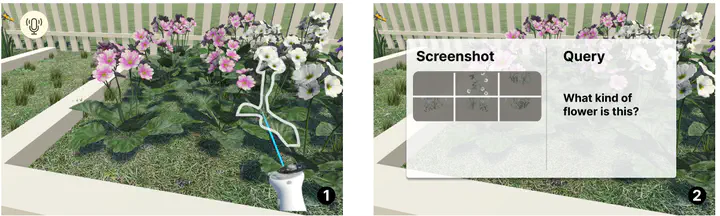

While search is a common need in Virtual Reality (VR) applications, current approaches are cumbersome, often requiring users to type on a mid-air keyboard using controllers in VR or to remove their VR equipment and search on a computer. We first conducted a literature review and a formative study, which identified six common search needs: knowing about one object, knowing about an object’s partial details, knowing about objects together with their environmental context, knowing about interactions with objects, finding objects within the field of view (FOV), and finding objects outside the FOV in the VR scene. Informed by these needs, we designed technology probes that leveraged recent advances in Vision Large Language Models and conducted a probe-based study with users to elicit feedback. Based on the findings, we derived design principles for VR designers and developers to consider when designing a user-friendly search interface in VR. While prior work on VR search tended to address specific aspects of search, our work contributes design considerations aimed at making search in VR easier and outlines potential future directions.